Amid rapidly rising global demand for artificial intelligence (AI) computing power and efficiency, GPU maker NVIDIA recently unveiled two landmark technologies: the Rubin CPX, a new class of GPU designed for large-scale context processing, and the NVIDIA MGX PCIe Switch Board with ConnectX-8 SuperNICs, which will serve as a key foundation of its systems. Together, the two signal that AI computing is moving into a new era of high performance, high efficiency, and high scalability.

The core mission of the NVIDIA Rubin CPX GPU is to break through the bottleneck AI systems face in long-context inference. As AI models grow, the need to process millions of tokens is becoming more common. Whether the task is analyzing a large software project, understanding long-form documents, or generating hour-long video, it strains traditional GPU architectures. Rubin CPX overcomes these limits with a new design that integrates video decoders, video encoders, and long-context inference processing on a single chip, delivering much higher speed and performance for these workloads.

▲ Optimizing inference by aligning GPU capabilities with context and generation workloads (Image source: Nvidia)

Imagine an AI that has to work through a document millions of words long, analyze a large software project, or generate an hour-long video. That is a huge challenge for traditional GPUs, and the Rubin CPX GPU was built to break exactly this bottleneck.

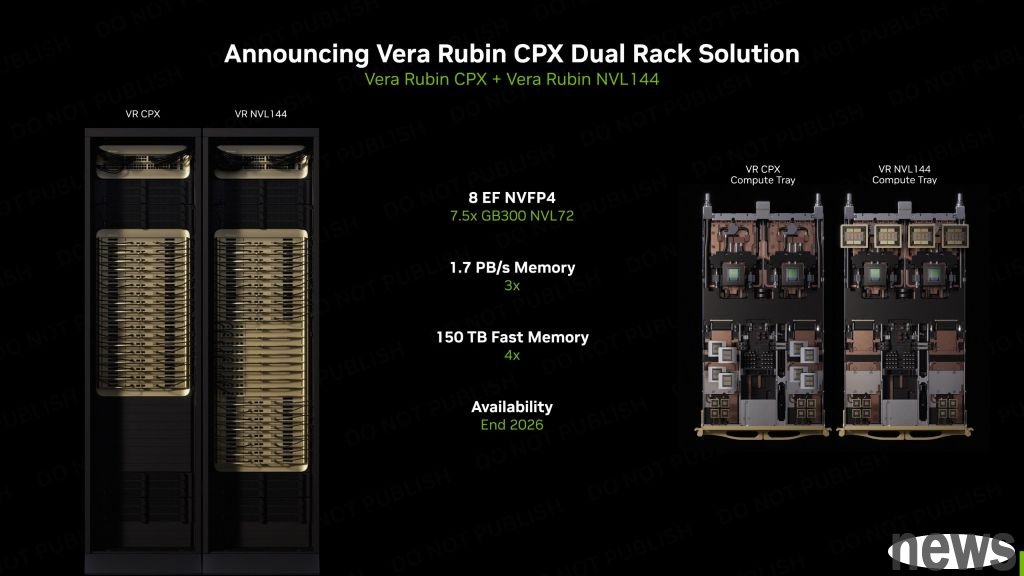

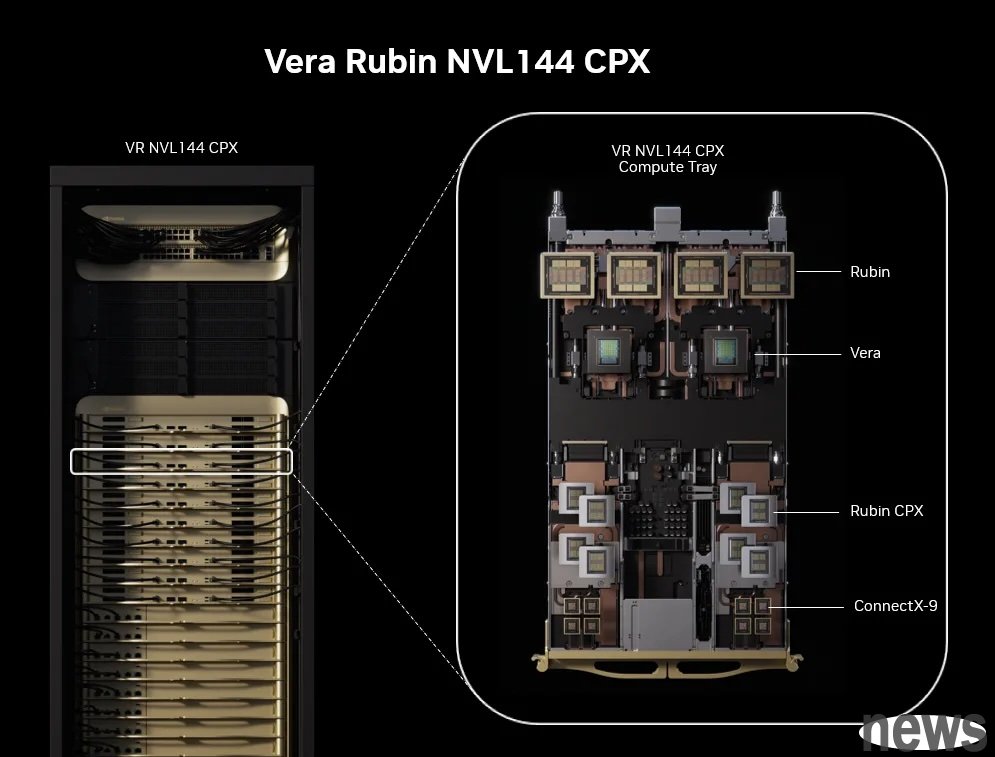

Rubin CPX GPU is built for long-context AI inference, with 7.5 times the performance of existing systems

NVIDIA founder and CEO Jensen Huang pointed out that Rubin CPX is the first CUDA GPU designed for massive-context AI. It works alongside the Vera CPU and Rubin GPU to form the Vera Rubin NVL144 CPX platform, which provides up to 8 exaflops of AI computing power in a single rack, 7.5 times the AI performance of the existing GB300 NVL72 system. The system is also equipped with 100 TB of memory and 1.7 PB/s of memory bandwidth, so data can move at extremely high speed to support demanding AI workloads. NVIDIA also offers Rubin CPX compute trays to help customers extend the value of their existing Vera Rubin systems.
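To put the rack-level figures in perspective, here is a minimal Python sketch of the arithmetic they imply. It uses only the numbers quoted above. The function names are illustrative, and the conversion assumes decimal units (1 PB = 1,000 TB), which the announcement does not spell out. The derived values are back-of-the-envelope estimates, not NVIDIA specifications.

```python
# Figures quoted in the article for one Vera Rubin NVL144 CPX rack.
RACK_EXAFLOPS = 8.0           # "up to 8 exaflops" of AI compute per rack
SPEEDUP_VS_GB300 = 7.5        # stated advantage over GB300 NVL72
RACK_MEMORY_TB = 100.0        # memory per rack
RACK_BANDWIDTH_PB_S = 1.7     # memory bandwidth per rack

TB_PER_PB = 1000.0            # assumption: decimal units


def implied_baseline_exaflops(rack_exaflops: float, speedup: float) -> float:
    """Compute the baseline system's throughput implied by a stated speedup."""
    return rack_exaflops / speedup


def seconds_to_sweep_memory(memory_tb: float, bandwidth_pb_s: float) -> float:
    """Time to read the whole memory pool once at the quoted bandwidth."""
    return memory_tb / (bandwidth_pb_s * TB_PER_PB)


baseline = implied_baseline_exaflops(RACK_EXAFLOPS, SPEEDUP_VS_GB300)
sweep = seconds_to_sweep_memory(RACK_MEMORY_TB, RACK_BANDWIDTH_PB_S)

print(f"Implied GB300 NVL72 throughput: {baseline:.2f} exaflops")
print(f"One full pass over rack memory: {sweep * 1000:.0f} ms")
```

Under these assumptions the comparison system works out to a little over 1 exaflop, and the rack could in principle stream through its entire 100 TB of memory in well under a tenth of a second.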

In terms of specifications, Rubin CPX delivers 30 petaflops of compute at NVFP4 precision and carries 128 GB of GDDR7 memory, allowing it to handle large-scale AI inference with very high energy efficiency. Compared with the GB300 NVL72, the Rubin CPX system offers 3 times faster attention processing, so AI models can handle longer context sequences without slowing down.

According to a post on the Facebook fan page "Richard only talks about fundamentals-Richard’s Research Blog", Rubin CPX is highly flexible: it can be paired with the Quantum-X800 InfiniBand scale-out fabric or the Spectrum-XGS Ethernet networking platform, together with NVIDIA ConnectX-9 SuperNICs, to meet the needs of different companies. NVIDIA also pointed out that every USD 100 million invested in Rubin CPX can generate up to USD 5 billion in token revenue, an attractive return on investment that converts AI technology directly into visible business value.
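The return-on-investment claim above reduces to simple arithmetic. The following Python sketch, with an illustrative helper name that is not taken from any NVIDIA material, shows the multiple implied by the two quoted dollar figures. It treats "up to USD 5 billion" as the best case and ignores operating costs, which the article does not quantify.

```python
def revenue_multiple(investment_usd: float, revenue_usd: float) -> float:
    """Return revenue generated per dollar invested."""
    if investment_usd <= 0:
        raise ValueError("investment must be positive")
    return revenue_usd / investment_usd


# Figures quoted in the article (best case, "up to").
investment = 100e6   # USD 100 million invested
revenue = 5e9        # up to USD 5 billion in token revenue

multiple = revenue_multiple(investment, revenue)
net_return_pct = (revenue - investment) / investment * 100

print(f"Revenue multiple: {multiple:.0f}x")
print(f"Net return before operating costs: {net_return_pct:.0f}%")
```

In other words, the quoted figures correspond to at most 50 dollars of token revenue per dollar of capital spent.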

PCIe Switch+CX8 I/O board boosts hardware performance and enables AI applications at scale

To bring out the full performance of Rubin CPX, NVIDIA simultaneously launched the world's first PCIe 6.0 I/O board. This board acts as the "nerve center" of an AI server. It integrates several key chips on one board and simplifies server design, so that all components can communicate with one another at very high speed.

Several leading AI companies are already adopting the technology: Cursor uses it to speed up code generation, and Runway uses it to create cinema-quality long-form video.

The PCIe Switch+CX8 I/O board is the world's first mass-produced I/O product built on the PCIe 6.0 standard. It integrates PCIe switch chips and ConnectX-8 SuperNICs on a single large board and provides 9 PCIe slots and 8 NIC connectors.

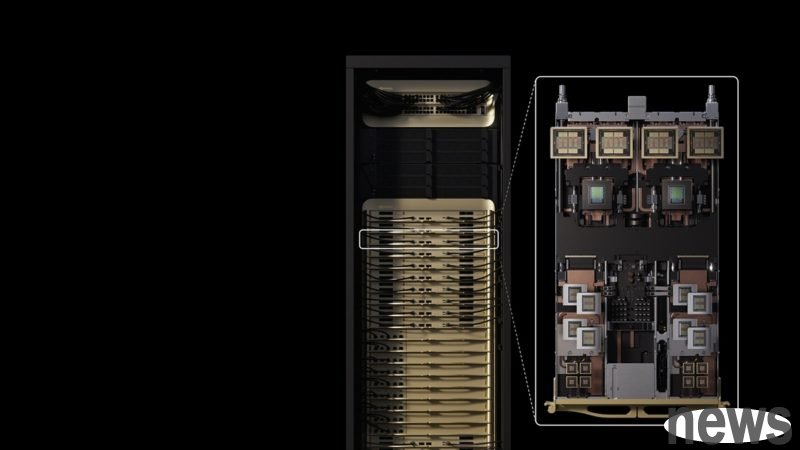

▲ NVIDIA Vera Rubin NVL144 CPX rack and tray, equipped with Rubin Context GPU (Rubin CPX), Rubin GPU and Vera CPU (Image source: Nvidia)

This I/O board is very different from a traditional motherboard. It carries no CPU and is designed specifically for AI GPU servers that need a great deal of computing power.

Its defining feature is that two key components, the PCIe switch and the CX8 NIC, are combined into one. Merging these two previously separate functions makes the server design simpler and reduces the number of parts.

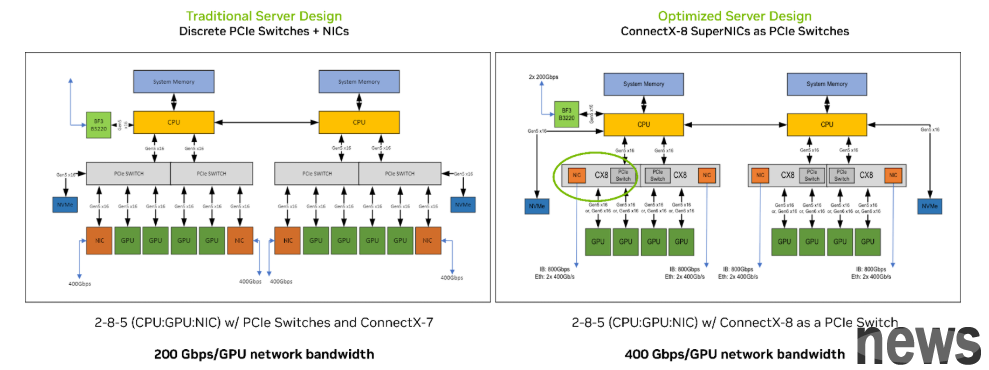

▲ Comparison of traditional server design (left) and optimized server design (right) using ConnectX-8 SuperNIC (Image source: Nvidia)

There are three main advantages of this design:

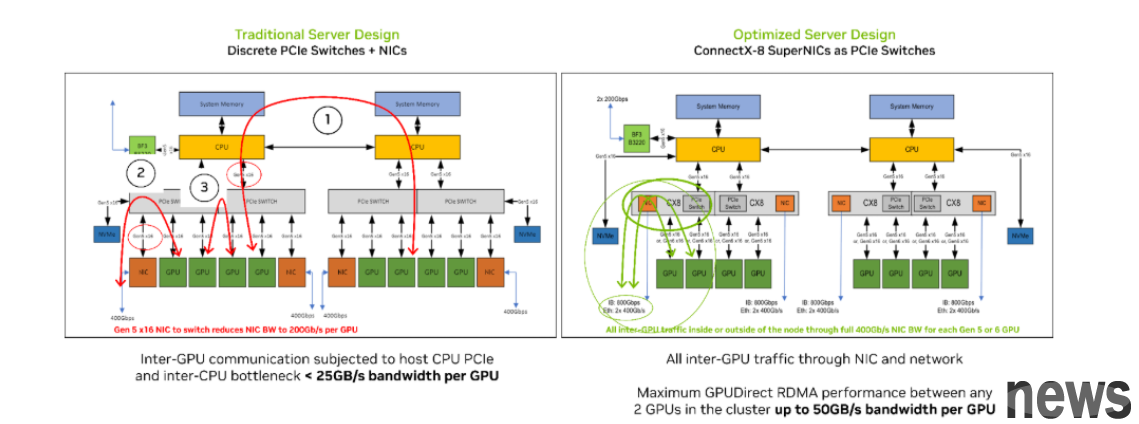

Faster speed: Because the components are highly integrated, each GPU in the server can communicate directly over high-speed PCIe 6.0, with transfer rates of up to 800 Gb/s, greatly reducing latency.

Better heat dissipation: With fewer small boards, air flows more smoothly inside the server and cooling efficiency improves.

Lower barrier to entry: With this board, NVIDIA simplifies the server architecture, allowing more companies to deploy and use high-performance AI computing systems more easily, and potentially displacing some existing solutions on the market.

▲ A comparison of the traditional server design (left) and optimized server design (right) using ConnectX-8 SuperNIC highlights three key GPU communication pathways (Image source: Nvidia)

This new technology has entered production and is being applied to NVIDIA's Rubin CPX computing systems. Some leading companies in the AI field are early adopters, such as:

Cursor: Uses its capabilities to accelerate intelligent code generation.

Runway: Uses it to generate cinema-quality long-form video.

Magic: Can process more than a hundred million tokens, allowing AI assistants to fully understand a complex software history and accelerate automated engineering.

Software and hardware together: a fully expanded ecosystem

Beyond the hardware breakthroughs, Rubin CPX is fully supported by NVIDIA's AI software ecosystem, including the Dynamo platform, which improves inference efficiency, the Nemotron models, and the NVIDIA AI Enterprise suite.

These software tools let enterprises deploy AI applications easily, whether in the cloud, in the data center, or on a workstation. Backed by NVIDIA's large developer community and more than 6,000 applications, the hardware advantages of Rubin CPX can be translated quickly into real business value.

NVIDIA said that Rubin CPX is expected to be available at the end of 2026. Although that is still some way off, the technology's advances in long-context processing, system integration, and return on investment have raised high expectations in the industry. It is not only an innovation in hardware architecture but also an important step toward the maturity and wider adoption of AI applications.